Embedded eyes: integrate eye-sights into objects

01 October 2011

Abstract of the Project

Eyes are involved in almost everything people do, and what we look at reveals our focus and attention. Eye movements carry a great deal of information about everyday activities such as reading and driving. Eye tracking technologies have been used successfully in research and laboratory settings, and we envision that eye tracking will in future be pervasively embedded in everyday interactive systems. Current eye tracking systems are typically designed for robust and accurate pupil tracking and gaze estimation; to achieve high accuracy and speed, they rely on specialized hardware such as cameras with zoom lenses and infrared illumination [4]. The goal of this project is to build eye tracking systems from sensors commonly found in everyday computing devices [1, 2, 3]. The focus is on capturing eye movements and estimating gaze with ordinary video cameras, without specialized hardware or infrared light sources. The project will investigate new computer vision and image processing techniques for eye tracking and gaze estimation, and will develop novel gaze-based interfaces for spontaneous and casual human-computer interaction.

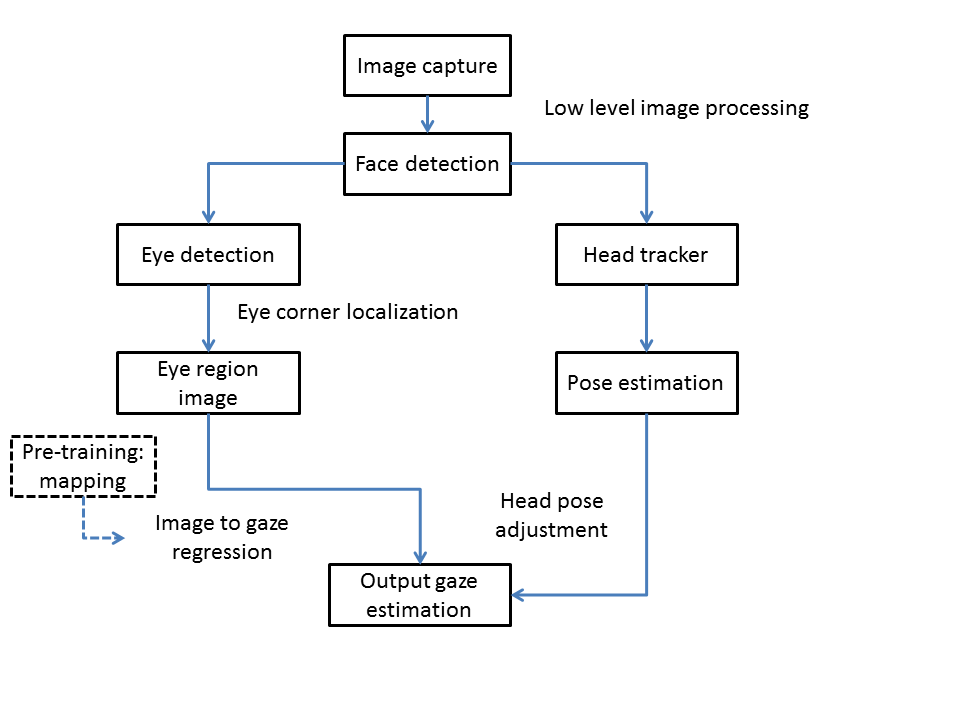

We propose a gaze estimation method that uses computer vision techniques to analyse images captured by a video camera. Figure 1 gives an overview of the proposed system. The camera can be attached to any object (e.g. a large display or a laptop) and streams real-time video. The system automatically detects a user standing in front of the camera and pre-processes the original RGB images: the user's face is detected first, followed by localization of both eyes. The eye images are then extracted and used to determine gaze. The mapping from eye images to gaze coordinates is learned in a prior training session, and for each new incoming image the trained model predicts the gaze coordinates. To accommodate natural head movement, the final gaze estimate is adjusted according to the estimated head pose.

Figure 1: Overview of the proposed gaze estimation system.
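The train-then-predict steps described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the project's implementation: eye-image feature vectors are assumed to be already extracted (face detection and eye localization are omitted), the learned mapping is stood in for by a k-nearest-neighbour regressor, and the head-pose adjustment is a hypothetical linear shift with an assumed scale `px_per_deg`. The names `CalibrationSample`, `KNNGazeMapper` and `adjust_for_head_pose` are illustrative only.

```python
# Minimal sketch of a train-then-predict gaze pipeline (hypothetical; not the
# project's code). Eye features are assumed to be extracted already.
from dataclasses import dataclass
from math import dist
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class CalibrationSample:
    features: Tuple[float, ...]  # feature vector from the cropped eye images
    gaze: Point                  # known on-screen target during training (pixels)


class KNNGazeMapper:
    """Stand-in mapping: average the targets of the k most similar training samples."""

    def __init__(self, k: int = 3) -> None:
        self.k = k
        self.samples: List[CalibrationSample] = []

    def fit(self, samples: Sequence[CalibrationSample]) -> "KNNGazeMapper":
        if len(samples) < self.k:
            raise ValueError("need at least k training samples")
        self.samples = list(samples)
        return self

    def predict(self, features: Sequence[float]) -> Point:
        # Rank training samples by Euclidean distance in feature space.
        nearest = sorted(self.samples, key=lambda s: dist(s.features, features))[: self.k]
        x = sum(s.gaze[0] for s in nearest) / self.k
        y = sum(s.gaze[1] for s in nearest) / self.k
        return (x, y)


def adjust_for_head_pose(gaze: Point, yaw_deg: float, pitch_deg: float,
                         px_per_deg: float = 20.0) -> Point:
    """Hypothetical linear correction: shift the estimate by the head rotation."""
    return (gaze[0] + yaw_deg * px_per_deg, gaze[1] + pitch_deg * px_per_deg)


if __name__ == "__main__":
    training = [
        CalibrationSample((0.0, 0.0), (100.0, 100.0)),
        CalibrationSample((1.0, 0.0), (900.0, 100.0)),
        CalibrationSample((0.0, 1.0), (100.0, 700.0)),
        CalibrationSample((1.0, 1.0), (900.0, 700.0)),
    ]
    mapper = KNNGazeMapper(k=1).fit(training)
    raw = mapper.predict((0.9, 0.1))            # nearest sample is (1.0, 0.0)
    print(raw)                                  # (900.0, 100.0)
    print(adjust_for_head_pose(raw, 2.0, 0.0))  # (940.0, 100.0)
```

A nearest-neighbour mapping is used here only because it needs no fitting code; any regressor trained on the same (features, target) pairs could take its place.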

Research Achievements to date

- Data collection and analysis [1, 2]: A dataset of eye-image/gaze-coordinate pairs was collected from 20 participants looking at 13 different on-screen locations. The dataset was analysed offline to investigate novel image processing algorithms for gaze estimation.

- Prototyping and user study [3]: A real-time gaze-based interaction prototype using a web camera was implemented. A user study with 14 participants was conducted to evaluate the usability and robustness of the gaze-based interface.
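The offline analysis in the data-collection item above can be illustrated with the following Python sketch. It is not the method of [1, 2]: the block-mean intensity grid is just one assumed example of a low-level image feature, the nearest-centroid classifier is a swapped-in stand-in, and holding out one participant at a time is an assumed evaluation protocol. Each sample is a (participant ID, feature vector, target label) triple, where the label would index one of the 13 on-screen locations; all function names are hypothetical.

```python
# Hypothetical sketch of an offline analysis: simple low-level eye-image features
# plus a nearest-centroid classifier over discrete on-screen gaze targets,
# evaluated with leave-one-participant-out cross-validation.
from collections import defaultdict
from math import dist
from typing import Dict, List, Sequence, Tuple

Image = Sequence[Sequence[float]]  # grayscale eye image as rows of intensities
Features = Tuple[float, ...]


def block_mean_features(img: Image, grid: Tuple[int, int] = (2, 4)) -> Features:
    """Downsample the image to a grid of mean intensities (needs rows/cols >= grid)."""
    rows, cols = len(img), len(img[0])
    gr, gc = grid
    feats = []
    for i in range(gr):
        for j in range(gc):
            block = [img[r][c]
                     for r in range(i * rows // gr, (i + 1) * rows // gr)
                     for c in range(j * cols // gc, (j + 1) * cols // gc)]
            feats.append(sum(block) / len(block))
    return tuple(feats)


def train_centroids(data: Sequence[Tuple[Features, int]]) -> Dict[int, Features]:
    """Mean feature vector per gaze-target label."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = defaultdict(int)
    for feats, label in data:
        acc = sums.setdefault(label, [0.0] * len(feats))
        for k, v in enumerate(feats):
            acc[k] += v
        counts[label] += 1
    return {lab: tuple(v / counts[lab] for v in acc) for lab, acc in sums.items()}


def classify(centroids: Dict[int, Features], feats: Features) -> int:
    """Label of the closest centroid (Euclidean distance)."""
    return min(centroids, key=lambda lab: dist(centroids[lab], feats))


def leave_one_participant_out(samples: Sequence[Tuple[str, Features, int]]) -> float:
    """Accuracy when each participant is held out in turn (needs >= 2 participants)."""
    participants = sorted({p for p, _, _ in samples})
    correct = total = 0
    for held_out in participants:
        centroids = train_centroids([(f, y) for p, f, y in samples if p != held_out])
        for p, f, y in samples:
            if p == held_out:
                correct += classify(centroids, f) == y
                total += 1
    return correct / total
```

Holding out whole participants rather than random images prevents the same person's images from appearing in both the training and the test set.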

References

[1] Y. Zhang, A. Bulling, and H. Gellersen. Discrimination of gaze directions using low-level eye image features. In Proceedings of PETMEI, pages 9–14. ACM, 2011.

[2] Y. Zhang, A. Bulling, and H. Gellersen. Towards pervasive gaze tracking with low-level image features. In Proceedings of the 7th International Symposium on Eye Tracking Research and Applications (ETRA 2012), 2012.

[3] Y. Zhang, A. Bulling, and H. Gellersen. Pervasive gaze-based interaction with public display using a webcam. In Adjunct Proceedings of the 10th International Conference on Pervasive Computing (Pervasive 2012), June 2012.

[4] D. Hansen and Q. Ji. In the eye of the beholder: A survey of models for eyes and gaze. IEEE Transactions on Pattern Analysis and Machine Intelligence, 32(3):478–500, 2010.

Personal page: http://www.yanxiazhang.com/

Collaborations:

- Imperial College London, London, UK

- Noldus Information Technology, The Netherlands